If you’re a longtime Ars reader, you may recall the salad days of our liveblogs. Many sites, including Ars, relied on external services like CoverItLive or ScribbleLive to embed a continuously updating liveblog widget inside a post. Sometimes they worked; other times, the services buckled under massive traffic (first iPad announcement, anyone?), knocking out multiple tech sites’ streams at once. Sometimes we'd get such a flood of readers that our own site would go down in favor of displaying our triumphant moonshark. We were left to dejectedly take to Twitter to continue up-to-the-minute updates for readers. The situation was far from ideal, but we made things work any way we could.

When Ars moved publishing platforms from Movable Type to WordPress in May 2012, Tech Director Jason Marlin and Lead Developer Lee Aylward realized they would have a lot more flexibility to create a liveblogging tool of their own. This began as an experimental side project, but it grew to become a kind of crowning achievement of the tech team (if we do say so ourselves). It's a bastion of stability that leaves behind the flaky history of will-they-won’t-they third-party solutions.

If you’ve ever seen Ars staffers like Jacqui “Fingers of Fire” Cheng lay down a liveblog in the last few months, you know how it works. From the Ars front page, readers click on a post, which has a “view liveblog” button at the bottom. If you click that, you’re taken to a page with a left-aligned column of updates from the reporters on the ground, including pictures, arranged with the most recent update at the top. Important updates pin off to the right.

The liveblog tool is a WordPress plugin that Marlin and Aylward wrote from scratch, and it allows multiple authors to enter updates simultaneously inside a single WordPress post. The reporters attending the liveblogged event are naturally in the post, along with at least one offsite staff member stationed to select and position photos (more on that in a bit).

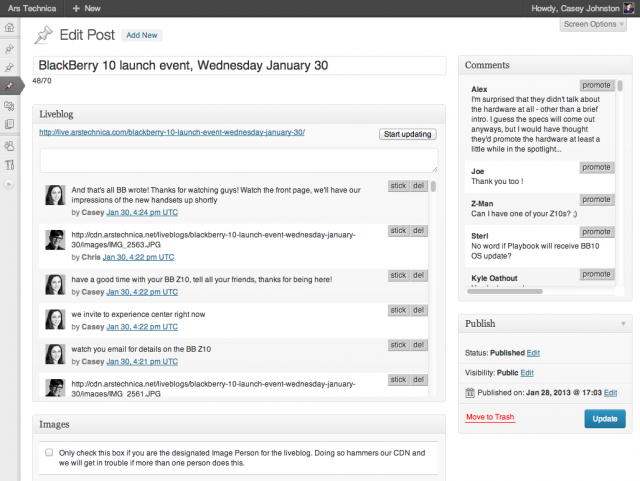

So when a liveblog is going on, a few staffers are camped inside the liveblog post at once. The liveblog interface that reporters see looks like this:

When one of the writers adds an update to the liveblog, the update is written to a JSON file called “events.json” on Amazon’s S3 (Simple Storage Service). When a reader loads the liveblog in their browser at live.arstechnica.com, the browser pulls down the events.json file. “The file includes every update to the liveblog so far,” said Aylward. “All updates are displayed in the reader’s window immediately.”
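As a minimal sketch of what the reader page might do with the contents of events.json, the function below arranges updates into the two columns described earlier: every update in the left-hand feed with the newest at the top, and important updates pinned to the right. The update shape (`id`, `time`, `pinned`, `html`) and the name `layoutUpdates` are hypothetical, since the article does not document the real file format, and we assume pinned updates also stay in the main feed.

```javascript
// Hypothetical update shape: { id, time, pinned, html }. The real
// events.json format is not documented; this is an illustrative sketch.
function layoutUpdates(events) {
  // Copy before sorting so the fetched array is not mutated, then put the
  // most recent update first, as on the reader page.
  const feed = events.slice().sort((a, b) => b.time - a.time);
  // Assumption: pinned updates appear both in the feed and in the right column.
  const pinned = feed.filter((update) => update.pinned);
  return { feed, pinned };
}

const page = layoutUpdates([
  { id: 1, time: 100, pinned: false, html: "Keynote starting" },
  { id: 2, time: 160, pinned: true, html: "New device announced" },
  { id: 3, time: 130, pinned: false, html: "Demo on stage" },
]);
console.log(page.feed.map((u) => u.id));   // [ 2, 3, 1 ]
console.log(page.pinned.map((u) => u.id)); // [ 2 ]
```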

Once that file has been fetched, the browser starts checking a second file, “status.json,” to see whether the liveblog is live (a “start/stop updating” button inside WordPress lets authors control this). If the event is live, the browser checks a third file, “recent.json,” every three seconds. That file holds the 50 most recent updates sent to the liveblog from WordPress, and any the reader hasn't seen yet are rendered in their liveblog feed. The code that pulls the full set of updates from events.json into the browser looks like this:

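```javascript
// Fetch the full list of updates from events.json.
// update_posts, timer and interval are defined elsewhere in the script (not shown).
function fetch_all() {
  $.ajax({
    // Append the current time so browsers and caches can't serve a stale copy.
    url: "events.json?" + (new Date()).getTime(),
    dataType: "json",
    success: update_posts,
    error: function(req) {
      // On failure, try again after `interval` milliseconds.
      timer = setTimeout(fetch_all, interval);
    }
  });
}
```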
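The status.json and recent.json steps described above can be sketched as a simple polling loop. This is a minimal illustration, not the Ars code: the file names and the three-second interval come from the article, while the JSON shapes (`{ live: true }` in status.json, an array of updates with an `id` in recent.json), the `render` callback, the function names, and re-checking status.json on every tick are assumptions. Deduplicating by `id` is what lets the browser show only unseen updates even though recent.json repeats the last 50 each time.

```javascript
const POLL_MS = 3000; // three seconds, per the article

// Return the updates in `recent` whose ids have not been rendered yet,
// recording them in `seen` so each update is shown only once.
function takeUnseen(seen, recent) {
  const fresh = [];
  for (const update of recent) {
    if (!seen.has(update.id)) {
      seen.add(update.id);
      fresh.push(update);
    }
  }
  return fresh;
}

// Fetch a JSON file with a cache-busting timestamp, as fetch_all() does.
async function getJson(base, name) {
  const res = await fetch(`${base}/${name}?${Date.now()}`);
  if (!res.ok) throw new Error(`${name}: HTTP ${res.status}`);
  return res.json();
}

// Poll status.json; while the event is live, also fetch recent.json and hand
// any unseen updates to render(). Returns a function that stops the loop.
function startPolling(base, seen, render) {
  let timer = null;
  let stopped = false;
  async function tick() {
    try {
      const status = await getJson(base, "status.json");
      if (status.live) {
        const fresh = takeUnseen(seen, await getJson(base, "recent.json"));
        if (fresh.length > 0) render(fresh);
      }
    } catch (err) {
      // Network or parse error: simply retry on the next tick.
    }
    if (!stopped) timer = setTimeout(tick, POLL_MS);
  }
  tick();
  return () => {
    stopped = true;
    clearTimeout(timer);
  };
}
```

In this sketch, `seen` would start out holding the ids already loaded from events.json. Because recent.json only ever holds 50 updates, a reader who somehow missed more than 50 between polls would skip some; with a three-second interval that should be rare.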

Aylward states that “lots of JavaScript” is layered into the tool to add timestamps, animations, hyperlinks, and inline images. The code that turns URLs into hyperlinks looks like this:

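```javascript
// Walk an element's child nodes and turn bare URLs in text nodes into links.
function linkify(elem) {
  var children = elem.childNodes;
  var length = children.length;
  var url_re = /(https?:\/\/[^\s<]*)/ig;

  for (var i = 0; i < length; i++) {
    var node = children[i];

    if (node.nodeName == "A") {
      // Already a link: leave it alone.
      continue;
    }
    else if (node.nodeName != "#text") {
      // An element: recurse into its children.
      linkify(node);
    }
    else if (node.nodeValue.match(url_re)) {
      // A text node containing a URL: HTML-escape the text, wrap each URL
      // in an <a> tag, and swap the text node for a <span>. Replacing one
      // node with one node keeps `length` valid for the loop.
      var span = document.createElement("SPAN");
      var escaped = $('<div/>').text(node.nodeValue).html();
      span.innerHTML = escaped.replace(
        url_re, '<a href="$1">$1</a>');
      node.parentNode.replaceChild(span, node);
    }
  }
}
```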

URLs are fairly simple to transform, but getting images to a place where the JavaScript can pull them in is another story.

While posting a text update inside WordPress is as simple as shooting from the hip, the staffer responsible for taking pictures has a slightly more complicated setup. This Arsian has a Canon camera (most of the staff have T3is, but there’s also a 7D that gets passed around) tethered to their computer via USB, with a tethering program open.

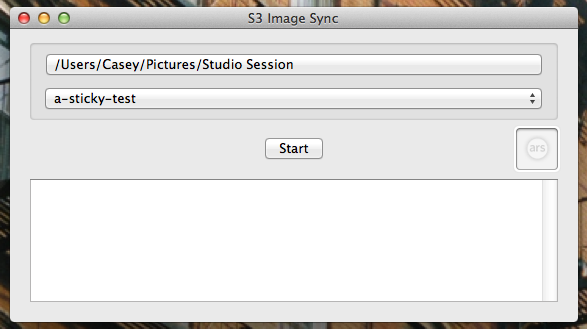

There are a couple of tethering software options: Canon provides a utility on the CD included in the camera’s box called, appropriately, Canon EOS Utility, but programs like Lightroom also have this functionality. A second utility, written internally at Ars and called S3 Image Sync, handles the transport of the pictures.

When a camera is tethered to a computer, the tethering software automatically imports each shot and saves the image file to a folder. When an image lands in that folder, S3 Image Sync grabs it, resizes it to a width of 640 pixels, adds a watermark to one corner, and syncs it to Amazon S3. The WordPress plugin that Aylward and Marlin wrote polls S3 for new images and pulls them into the liveblogging interface. Another remote staffer reviews these images and promotes the best ones into the liveblog, where they are pushed as updates to readers’ browsers.
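S3 Image Sync's resize-and-watermark step boils down to a little geometry. The sketch below computes the 640-pixel-wide output size and where a watermark would go; it assumes a bottom-right watermark, a 10-pixel margin, and a hypothetical 120 × 40 watermark image, since the article says only "one corner." The example input is the 5184 × 3456 full-resolution frame the T3i and 7D produce. The actual resampling and compositing would be handed to an image library; which one Ars used isn't stated.

```javascript
// Hypothetical constants: the article specifies the 640-pixel width but not
// the watermark corner or margin.
const TARGET_WIDTH = 640;
const MARGIN = 10;

// Scale an image to TARGET_WIDTH, keeping its aspect ratio.
function resizedSize(width, height) {
  const scale = TARGET_WIDTH / width;
  return { width: TARGET_WIDTH, height: Math.round(height * scale) };
}

// Top-left position for the watermark so that it sits MARGIN pixels in
// from the bottom-right edges of the resized image.
function watermarkOrigin(image, mark) {
  return {
    x: image.width - mark.width - MARGIN,
    y: image.height - mark.height - MARGIN,
  };
}

const size = resizedSize(5184, 3456);
console.log(size);                                              // { width: 640, height: 427 }
console.log(watermarkOrigin(size, { width: 120, height: 40 })); // { x: 510, y: 377 }
```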

As a result of Marlin’s and Aylward’s work, Ars has enjoyed a remarkably stable liveblogging platform for about nine months now. We can’t rule out problems on the reporters’ end, but on the side of our content management system and our readers, we’ve had scarcely a blip since we swore off third-party solutions. This means readers and staff are rarely graced with the presence of our pet moonshark these days, but we’d say it’s a worthy tradeoff.
