Over the last few years, the meteoric rise of computing power has allowed us to build ever-larger collections of model neurons. Often, these models have been announced with a certain degree of hype, promising performance equivalent to that of an actual animal. In reality, simply throwing more silicon neurons at a problem hasn't brought us much closer to either understanding how the brain works or getting brain-like performance out of our computers.

In a paper published in yesterday's Science, researchers report on a new machine called (perhaps unfortunately) SPAUN, which reinforces the critical role that architecture can play in getting brain-like performance. All told, SPAUN makes do with "only" 2.5 million neurons, but it has them arranged in a specific collection of functional units with populations of neurons dedicated to things like working memory and information decoding. Although SPAUN isn't as flexible as a real brain, it handles a number of tasks about as well as actual humans.

Computers operate in binary, through huge collections of switches that can be in either "on" or "off" states. Real neurons simply can't operate the same way. Although they can have spikes of activity where a voltage change propagates along the cell, that voltage change is transient, and things quickly return to their resting state. Instead, neurons convey information through the pattern and timing of the spikes they create.

To begin with, we can't directly map neuron behavior to silicon. We have to build a model of spiking neurons in code and then link those models into collections of functioning neurons.
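To get a feel for what a spiking neuron model in code can look like, here is a minimal Python sketch of a leaky integrate-and-fire neuron. This is a common textbook simplification and not necessarily the model used in SPAUN; the function name `simulate_lif` and every parameter value are assumptions chosen for illustration.

```python
from typing import List


def simulate_lif(
    input_current: float,
    steps: int = 1000,
    dt: float = 0.001,      # simulation time step in seconds (assumed)
    tau: float = 0.02,      # membrane time constant in seconds (assumed)
    v_thresh: float = 1.0,  # spike threshold, arbitrary units (assumed)
    v_reset: float = 0.0,   # voltage right after a spike (assumed)
) -> List[float]:
    """Return the spike times (in seconds) for a constant input."""
    v = v_reset
    spike_times: List[float] = []
    for step in range(steps):
        # Leaky integration: the voltage drifts toward the input level.
        v += dt * (input_current - v) / tau
        if v >= v_thresh:
            # The spike is transient: record it, then reset the voltage.
            spike_times.append(step * dt)
            v = v_reset
    return spike_times


weak = simulate_lif(0.5)    # settles below threshold
medium = simulate_lif(1.5)
strong = simulate_lif(3.0)
print(len(weak), len(medium), len(strong))
```

In this sketch, a weak input never reaches threshold and produces no spikes, while a stronger input produces more spikes in the same window. The information is carried by when and how often the model spikes, not by a switch that stays on or off.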

But simply throwing together a large enough collection of these model neurons won't get you a brain. An actual brain is composed of functional units that specialize in a process, such as the visual cortex, which helps with object recognition. These structures tend to have neurons with specific spiking properties and connect with a limited number of other structures that they can share information with. This lets a single structure supply information to a variety of processes. For example, only a small area of the brain is dedicated to recognizing written letters and digits, but it can feed what it recognizes to a variety of other structures that can do different things with the information.

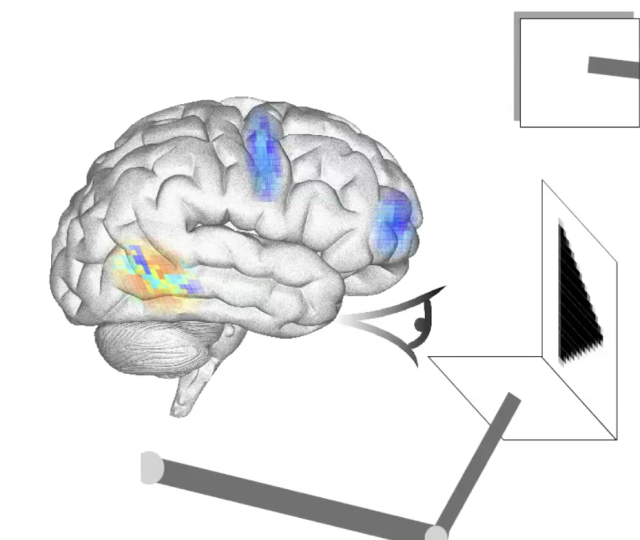

The creators of SPAUN have put together an architecture of functional units, with each unit consisting of a population of modeled spiking neurons. SPAUN takes input from a simple camera, and has a unit that encodes what it sees into spikes. Those spikes are then compressed into a set of key features which can be fed into a recognition system. Another collection of neurons handles task-specific processing and converts the results into a desired action, such as "draw the digit '2.'" From there, further functional units convert that to specific mechanical motions, allowing a robotic arm to output the intended result.

Two key features hang off this otherwise linear arrangement of processing units. One is a collection of working memory neurons, which allows the system access to short-term storage of things like lists of numbers. The other is a task-selection unit. This allows the authors to send a specific instruction—in SPAUN's case, something like "A2"—that the system uses to determine what to do with any subsequent input. Once a task is identified, this unit configures the remaining regions so that they perform specific actions. It essentially reconfigures the flow of information and how each input is used by the subsequent functional units.
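The idea of a task selector that reconfigures how later input is used can be sketched in ordinary Python. This is only a loose analogy, since SPAUN does all of this with populations of spiking neurons. The class name `TaskSelector`, the task table, and the meaning attached to each code (including "A2") are hypothetical and are not taken from the paper.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical task table: both the codes and their behaviors are made up
# for illustration. Each task reads the digits held in working memory.
TASKS: Dict[str, Callable[[List[int]], int]] = {
    "A0": lambda memory: memory[-1],                  # report the latest digit
    "A1": lambda memory: sum(memory[-2:]),            # add the last two digits
    "A2": lambda memory: memory.index(max(memory)),   # position of the largest digit
}


class TaskSelector:
    """Picks a task from an instruction, then routes later input to it."""

    def __init__(self) -> None:
        self.current_task: Optional[Callable[[List[int]], int]] = None
        self.working_memory: List[int] = []

    def instruct(self, code: str) -> None:
        if code not in TASKS:
            # Only pre-specified tasks are supported.
            raise ValueError(f"unknown task code: {code}")
        self.current_task = TASKS[code]
        self.working_memory = []  # start the new task with empty storage

    def see(self, digit: int) -> None:
        # The same recognized digit can feed any of the tasks.
        self.working_memory.append(digit)

    def act(self) -> int:
        if self.current_task is None:
            raise RuntimeError("no task selected")
        return self.current_task(self.working_memory)


selector = TaskSelector()
selector.instruct("A1")
selector.see(3)
selector.see(4)
print(selector.act())
```

The point of the design is that the recognition input (`see`) and the short-term storage are shared, while the instruction alone decides what happens to the stored digits.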

The system is limited, in that the task selector can only handle a fixed set of pre-specified tasks. But it's also extremely flexible. The image recognition portion, which picks out letters and digits, can feed what it "sees" into a wide variety of tasks. In the same way, the working memory only stores numbers, but the processing units can use the numbers held there for a range of purposes.

To demonstrate SPAUN's flexibility, the authors programmed the task selector to handle eight different tasks. Some of these were pretty basic, like recognizing a digit or adding two digits shown in succession. But the tasks got progressively more complex, like identifying the position of a digit in a list. At the higher end of task complexity, it could make basic inferences (given 2, 4, 6; and 3, 5, 7; how would you complete 1, 3, x?). In its own way, it could learn. Given lists of three numbers, it had to figure out which ones were associated with the highest reward without being given any information.
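The inference example above can be worked through explicitly. The sketch below uses plain arithmetic and assumes the pattern is a constant step between the last two numbers of each example; it says nothing about how SPAUN's neurons arrive at the answer, and the function name `complete_sequence` is hypothetical.

```python
from typing import List, Sequence


def complete_sequence(examples: Sequence[Sequence[int]], partial: List[int]) -> int:
    """Infer a shared final step from the examples and apply it to `partial`."""
    # Collect the step between the last two numbers of every example.
    steps = {example[-1] - example[-2] for example in examples}
    if len(steps) != 1:
        raise ValueError("examples do not share a single step")
    return partial[-1] + steps.pop()


# Given 2, 4, 6 and 3, 5, 7, complete 1, 3, x.
x = complete_sequence([[2, 4, 6], [3, 5, 7]], [1, 3])
print(x)  # 5
```

Both examples end with a step of 2, so the sequence 1, 3 is completed with 5.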

SPAUN wasn't 100 percent accurate for all of these tasks. For example, it was only 94 percent accurate with character recognition. But given characters at the same resolution that SPAUN was working with, humans are only 98 percent accurate.

Beyond its ability to solve these specific tasks, the key thing about SPAUN is that it isn't limited to them. As more tasks are added to the task-selection unit, SPAUN's abilities should expand accordingly. And because of its modular nature, additional features could be added to other parts of the system, such as the ability to speak the output rather than writing it.

That said, the authors recognize that the system is pretty limited as it stands. First and foremost, though it can learn how to adjust its responses within a given task, it can't actually learn new tasks. And it isn't an especially accurate model of the brain. The spiking behavior of its model neurons, for example, is far more regular than that of actual living neurons, making the traces of its activity look bizarrely uniform (at least to someone used to observing the output of biological systems).

But none of these problems are obviously insurmountable. The model neurons could eventually be replaced with ones that operate a bit more like the biological ones, and it's possible to envision creating a task-learning unit that feeds its results into the task-selection unit. Since we've already built models with over 1,000 times as many neurons, SPAUN may only just be getting started.

Science, 2012. DOI: 10.1126/science.1225266.
