Apple's new Fusion Drive technology is a bit of a mystery. Dismissed by some as just another simple cache mechanism, it's drawn a lot of attention in the weeks since it was announced. (And it wasn't until the end of last week that Macs equipped with Fusion Drives actually began shipping.) Ars has had its hands on a Fusion Drive-equipped Mac Mini for a few days now, and we set ourselves the challenge of solving the mystery. We spent several days elbows-deep in the technology, attempting to determine how Fusion Drive (henceforth referred to as FD) works and what effect it has on overall system performance. We also wanted to see what happens when installing Windows through Boot Camp, and what happens when Fusion Drive fails. We've learned a lot in a short time through observation and deduction, and while some questions are still unanswered, we've gotten a lot of outstanding, exclusive info.

To briefly review, FD is a feature announced by Phil Schiller at the October 23 Apple event, debuting in the new Mac Minis and iMacs also announced at the event. FD uses Core Storage to create a single volume out of a solid state disk and a much larger traditional hard disk drive, and then tiers data back and forth between the two. The goal is to deliver the speed of an SSD, while also taking advantage of the large size of the HDD to hold the bulk of your data. In typical Apple fashion, though, the presentation was high on hyperbole but low on actual technical meat. We've done two more peeks at FD, one diving into the impressive hackery done by Patrick Stein (a.k.a. "Jollyjinx"), who discovered that FD appears to work on any Mac running 10.8.2, not just new Minis and iMacs. The other detailed preliminary hands-on results with our FD-equipped Mac Mini.

Ars also got ahold of Apple's service technician training document for Fusion Drive, thanks to a helpful anonymous source. The document sheds more light on the technology, confirming several things we speculated about. In particular, it notes late 2012 21.5" and 27" iMacs come with flash "storage modules," similar to the ones already seen on MacBook Airs and Retina MacBook Pros. Late 2012 Mac Minis use a 2.5" SSD instead of a storage module.

The training still makes reference to FD moving "apps and documents" between SSD and HDD, though testing has shown this isn't quite true. FD operates below the files, actually moving Core Storage blocks (which seem to be referred to internally as "chunks," as we'll see). Apple's presentation of the technology as moving "apps and documents" makes perfect sense, though: apps and documents are easy to explain in a quick launch presentation, while an actual discussion of files versus file system clusters versus Core Storage blocks versus disk blocks would be difficult to cram into a single slide.

We've been poking and prodding at a Fusion Drive Mac Mini all weekend, and we've dug up lots of additional juicy information on FD's real-world functioning. We also dove into what happens when things go wrong.

A glass half-full

Commenters in the other articles—particularly those who only skimmed the texts—have wondered at length why we're spending so much (virtual) ink covering Fusion Drive. Isn't it just a plain caching solution? Isn't it the same as Intel SRT? Hasn't Linux been doing this since 1937?

No, no, and no. Intel's Smart Response Technology is a feature available on its newer Ivy Bridge chipsets, and it allows the use of an SSD (up to 64GB in size) as a write-back or write-through cache for the computer's hard drive. One significant difference between FD and a caching technology like Intel SRT is that Fusion Drive alters the canonical location of the data it tiers, moving it (copying it, really, because we don't see a "delete" file system call during Fusion migrations, as we'll demonstrate in a bit) from SSD to HDD. More importantly, with FD, as much data as possible goes to the SSD first, with data spilling off of the SSD onto the HDD. Picture Fusion Drive's SSD as a small drinking glass and the HDD as a much larger bucket sitting below it. When you put data onto a Fusion Drive, it's like you're pouring water into the glass; eventually, as the glass fills, water slops over the side and begins to be caught by the bucket. With Fusion Drive, you always pour into the glass and it spills into the bucket as needed.

On the other hand, caching solutions like SRT algorithmically determine what things should be mirrored up from HDD onto SSD. Even though the SSD can be used as a write cache, the default location of data is on the HDD, not the SSD. In caching, the HDD is the storage device with which you interact, and the SSD is used to augment the speed of the HDD. In Fusion Drive, the SSD is the device with which you interact and the HDD is used to augment the capacity of the SSD.

I'm definitely not going all starry-eyed over Fusion Drive, and it's not a revolutionary new thing that will make your computer shoot rainbows out of its USB slots while curing cancer and making sick children well again. However, as we'll see, Fusion Drive is a transparent tiering technology that simply works. It's that seamless always-on functionality that makes it newsworthy—you buy a computer with Fusion Drive enabled and you don't need to install or configure any additional hardware or software in order to enjoy its benefits.

Mr. Fusion

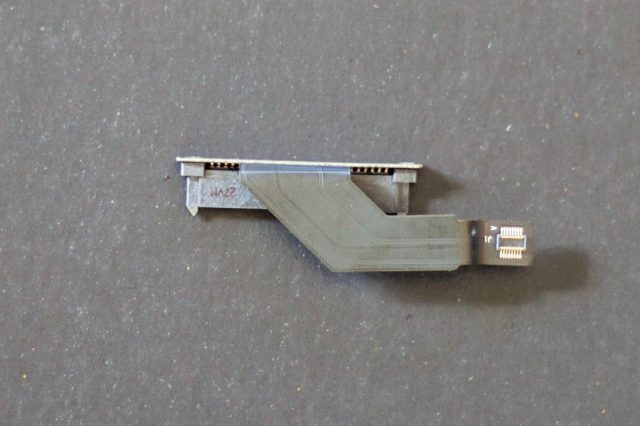

To verify what Apple says in its training, and because we just can't leave well enough alone, we took screwdriver and spudger to our perfectly good Mini and broke it down into its component parts. We wanted to see for ourselves exactly what kind of SSD is in there.

It's a Samsung 830-based MZ-5PC1280, reportedly the same type of SATA III SSD shipping with current non-Retina MacBook Pros, though apparently with updated firmware. It comes mounted in the Mini's lower HDD bay, connected to the L-shaped motherboard with an adapter.

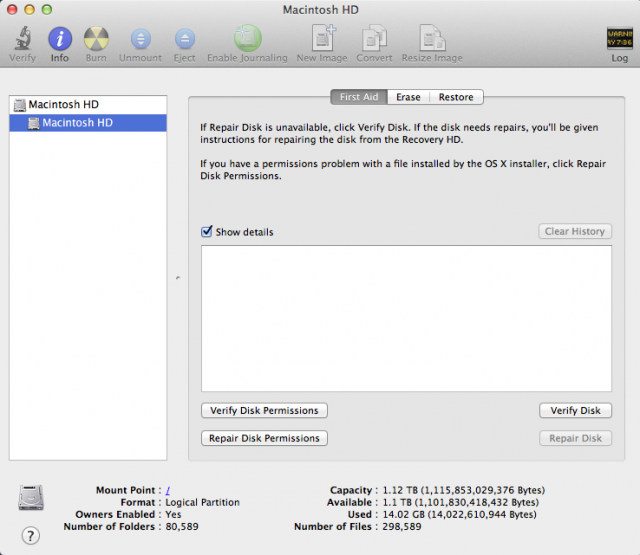

Though there are indeed two physical disks, FD unifies them into a single volume. From a user's perspective, it's a single disk, and you interact with the FD volume in exactly the same fashion as you would a standard disk. No configuration or fiddling is required.

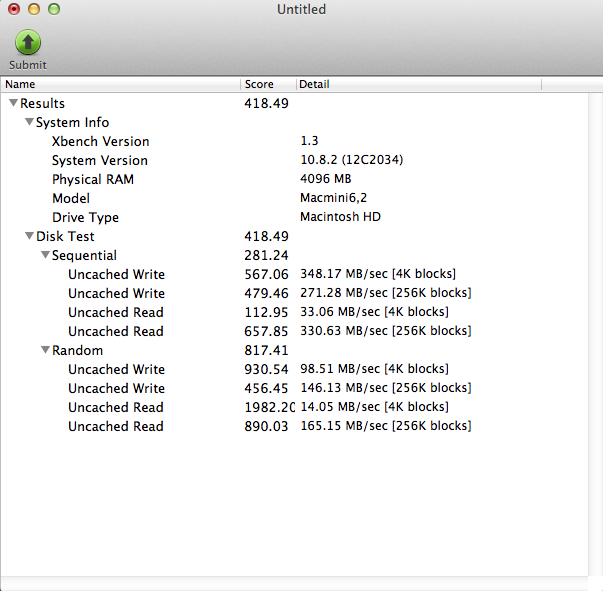

Performance is right where you'd expect it to be for a mid-tier SSD, with XBench reporting numbers that are good, but not outstanding. Tellingly, there's no way to bench the HDD—the benchmark applies itself to the disk subsystem, and Core Storage ensures that it interfaces with the SSD.

To actually see the SSD and the HDD separately, you have to go a little lower and use the command-line diskutil tool, which shows the Mini's SSD and HDD are definitely two separate physical devices:

    LeeHs-Mac-mini:~ leeh$ diskutil cs list
    CoreStorage logical volume groups (1 found)
    |
    +-- Logical Volume Group 31CBA650-4ABC-4DA6-AA73-6D96B36693E2
        =====================================================
        Name:         Macintosh HD
        Size:         1120333979648 B (1.1 TB)
        Free Space:   0 B (0 B)
        |
        +-< Physical Volume F4A50F9C-841D-4C6D-AFC4-6A10560E0717
        |   ----------------------------------------------------
        |   Index:    0
        |   Disk:     disk0s2
        |   Status:   Online
        |   Size:     120988852224 B (121.0 GB)
        |
        +-< Physical Volume C86789FD-1978-453A-BCA7-81DC05AA66C7
        |   ----------------------------------------------------
        |   Index:    1
        |   Disk:     disk1s2
        |   Status:   Online
        |   Size:     999345127424 B (999.3 GB)
        |
        +-> Logical Volume Family AA92FDF8-F962-4DE3-AC2F-1B6ABF22AA22
            ------------------------------------------------------
            Encryption Status:       Unlocked
            Encryption Type:         None
            Conversion Status:       NoConversion
            Conversion Direction:    -none-
            Has Encrypted Extents:   No
            Fully Secure:            No
            Passphrase Required:     No
            |
            +-> Logical Volume B97C9558-14FC-4BFC-B413-AFB292BD29F0
                -----------------------------------------------
                Disk:               disk2
                Status:             Online
                Size (Total):       1115853029376 B (1.1 TB)
                Size (Converted):   -none-
                Revertible:         No
                LV Name:            Macintosh HD
                Volume Name:        Macintosh HD
                Content Hint:       Apple_HFS
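The sizes in that listing can be cross-checked with a little arithmetic. The short Python sketch below uses only the byte counts printed by diskutil; the variable names are ours, and the gap between the volume group and the logical volume is simply reported, since the output doesn't say what that space is used for.

```python
# Byte counts copied from the `diskutil cs list` output above.
ssd_pv = 120_988_852_224      # Physical Volume, index 0 (disk0s2, the SSD)
hdd_pv = 999_345_127_424      # Physical Volume, index 1 (disk1s2, the HDD)
lvg_size = 1_120_333_979_648  # Logical Volume Group "Macintosh HD"
lv_size = 1_115_853_029_376   # Logical Volume (disk2), "Size (Total)"

# The volume group should be exactly the sum of its two physical volumes.
pv_total = ssd_pv + hdd_pv
print(pv_total == lvg_size)  # True

# Space in the group that is not exposed through the logical volume.
# What Core Storage does with it is not stated in the output.
unexposed = lvg_size - lv_size
print(f"{unexposed / 1e9:.2f} GB of the group is not part of the logical volume")
```

The group size matching the sum of the two physical volumes to the byte is what you'd expect if Core Storage is concatenating both devices into one pool rather than mirroring one onto the other.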

If you're a casual user and don't care about the internals, there is nothing that you need to do to make FD "work." You power on the system, log on, and use it. A Fusion Drive-equipped Mac leaves the factory with the operating system and all of the pre-installed applications on the SSD side, so the system is just as snappy and responsive as if it were an SSD-only Mac. Boot times out of the box on the Mini were impressively quick, with the system going from button-press to log-on prompt in about seven seconds by my stopwatch. Apple's documentation indicates that FD will always keep the operating system and other critical files pinned to the SSD, so this snappiness should persist throughout the Mac's life.

Digging deeper

That's all fine and good for a brand new system, but we wanted to know how FD handles things during normal usage. One of the first things many folks are going to do to a new Mac is migrate their existing data onto it, and so we attempted to find out how FD behaves when something like that happens. Initial reports from Anandtech indicated that FD keeps a 4GB "landing zone" on the SSD, and that all writes were directed to that landing zone. This doesn't appear to be correct. Or, rather, it appears to be partially right, but not entirely so.

Out of the box, the system showed only about 10GB in use. I copied a 3GB file from my NAS down onto the Mini while monitoring the physical drives' throughput with iostat, and all IO occurred on the SSD. Once the copy was completed, I let the Mini sit for about an hour to see if it would move some or all of the file down to HDD, but no move occurred. I copied a second 3GB file and observed the same behavior—the copy landed on the SSD, and seemed to stay there. I followed that up with a large 8GB file, which also landed entirely on the SSD and stayed there. Throughout all of this, iostat showed no activity at all on the HDD.

The 4GB "landing zone" didn't make itself apparent anywhere in the results, so I then decided to emulate a typical "user moving in" type of behavior by filling up the SSD with stuff to see what FD would do. I copied down about 120 GB of files, which would more than fill the SSD and force the system to show me its contingency behavior.

When the copy started, all data went to the SSD, just as before. When the amount of data on the SSD hit about 110 GB (roughly the size of the Core Storage partition on the SSD), IO shifted smoothly from the SSD (disk0 in the iostat output below) to the HDD (disk1), without interrupting the copy:

          disk0                disk1
       KB/t tps   MB/s      KB/t tps   MB/s
     915.91 141 125.98      0.00   0   0.00
    1016.09 129 127.88      0.00   0   0.00
    1014.97 113 111.93      0.00   0   0.00
    1011.56  82  80.92      0.00   0   0.00
    1016.03 128 126.84      0.00   0   0.00
    1016.09 129 127.84      0.00   0   0.00
    1013.48  97  95.88      0.00   0   0.00
    1024.00  96  95.96      0.00   0   0.00
     958.58  48  44.91   1007.30  57  56.04
       4.00   1   0.00   1010.16  74  72.92
       8.00  24   0.19   1024.00  66  65.92
       4.00   2   0.01   1010.53  76  74.95
      15.43   7   0.11    974.74  70  66.59
       4.00   1   0.00    912.00  82  72.95
       4.00   1   0.00    931.33  96  87.21
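One way to pin down where the handoff happens is to scan the samples for the first one in which disk1 carries real traffic. This is a minimal sketch using the rows from the iostat output above; the function name and the 1 MB/s threshold are arbitrary choices of ours for illustration.

```python
# Rows from the iostat output above: disk0 KB/t, tps, MB/s, then disk1 KB/t, tps, MB/s.
ROWS = """
915.91 141 125.98 0.00 0 0.00
1016.09 129 127.88 0.00 0 0.00
1014.97 113 111.93 0.00 0 0.00
1011.56 82 80.92 0.00 0 0.00
1016.03 128 126.84 0.00 0 0.00
1016.09 129 127.84 0.00 0 0.00
1013.48 97 95.88 0.00 0 0.00
1024.00 96 95.96 0.00 0 0.00
958.58 48 44.91 1007.30 57 56.04
4.00 1 0.00 1010.16 74 72.92
8.00 24 0.19 1024.00 66 65.92
4.00 2 0.01 1010.53 76 74.95
15.43 7 0.11 974.74 70 66.59
4.00 1 0.00 912.00 82 72.95
4.00 1 0.00 931.33 96 87.21
"""

def first_hdd_sample(rows, threshold_mb_s=1.0):
    """Return the 0-based index of the first sample where disk1 exceeds the threshold."""
    for index, line in enumerate(rows.strip().splitlines()):
        fields = line.split()
        disk1_mb_s = float(fields[5])  # sixth column is disk1 MB/s
        if disk1_mb_s > threshold_mb_s:
            return index
    return None

switch = first_hdd_sample(ROWS)
print(f"disk1 first carries traffic in sample {switch + 1} of {len(ROWS.strip().splitlines())}")
```

In the transition sample both disks are busy at once, and from the next sample on the SSD's throughput drops to near zero while the HDD takes over the copy.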

The copy job proceeded to completion without issue. This was all done over gigabit Ethernet from a Synology DS412+ NAS; note the SSD is able to ingest files at what amounts to line speed (gigabit Ethernet maxes out at about 120-ish MB per second, factoring in a bit of overhead), while the slower 5400-rpm HDD can't write nearly as fast.

I was interested in seeing what FD would do after the job was completed, and the system didn't disappoint. There was a pause of about twenty seconds, and then FD began moving data. Based on the observed throughput and the amount of data moved, it's clear that FD was transferring things from the SSD to the HDD:

          disk0                disk1
       KB/t tps   MB/s      KB/t tps   MB/s
       4.00   1   0.00   1010.35  75  73.90
       4.00   3   0.01   1024.00  72  71.94
       4.00   1   0.00   1009.97  73  71.90
       5.33   3   0.02    932.87  78  70.99
       8.00  38   0.30   1011.09  79  77.91
       4.80  10   0.05    969.86  56  52.98
       0.00   0   0.00    999.47  75  73.14
       4.00   1   0.00    404.18  88  34.70
      30.72 304   9.11    748.71  68  49.66
      30.29 295   8.71    484.47  93  43.94
      29.43 318   9.13      0.00   0   0.00
      29.89 316   9.21      0.00   0   0.00
      30.78 289   8.68      0.00   0   0.00
      20.92 646  13.20      0.00   0   0.00
      29.62 468  13.55      0.00   0   0.00
      23.18 428   9.70     75.83  46   3.40
      29.27 406  11.62      0.00   0   0.00
      29.69 239   6.92      4.00   1   0.00
      28.89 369  10.40      0.00   0   0.00
      29.09 517  14.68      0.00   0   0.00
          disk0                disk1
       KB/t tps   MB/s      KB/t tps   MB/s
      30.12 455  13.39      0.00   0   0.00
      28.90 587  16.57      0.00   0   0.00
      30.40  25   0.74     69.92  50   3.41
       0.00   0   0.00      0.00   0   0.00
      13.33 156   2.03      6.67   3   0.02
       0.00   0   0.00      0.00   0   0.00
       8.00   9   0.07      0.00   0   0.00
       0.00   0   0.00      0.00   0   0.00
       0.00   0   0.00      0.00   0   0.00
     128.00 202  25.21    128.00 202  25.21
     128.00 455  56.90    128.00 454  56.78
     125.69 359  44.12    128.00 353  44.18
     111.18 427  46.39    128.00 367  45.92
     128.00 485  60.64    128.00 484  60.52
     119.74 667  78.00    128.00 622  77.76
     124.13 681  82.54    128.00 659  82.36
     128.00 665  83.13    128.00 665  83.13
     123.59 597  72.06    128.00 575  71.89
     128.00 679  84.88    128.00 678  84.75
     123.42 683  82.31    128.00 658  82.25
          disk0                disk1
       KB/t tps   MB/s      KB/t tps   MB/s
     123.56 676  81.56    128.00 650  81.24
     128.00 503  62.91    128.00 503  62.91
     122.32 506  60.47    128.00 483  60.41
     128.00 643  80.38    128.00 642  80.25
     122.73 682  81.74    128.00 652  81.50
     123.80 685  82.82    128.00 662  82.75
     128.00 565  70.64    128.00 564  70.52
     122.57 529  63.34    128.00 506  63.28
     128.00 555  69.40    128.00 555  69.40
     121.91 708  84.28    128.00 672  84.00
     128.00 661  82.63    128.00 661  82.63
     122.28 586  69.96    128.00 558  69.74
     128.00 458  57.28    128.00 457  57.16
     108.32 381  40.34    127.76 319  39.86

This was correlated with fs_usage, which showed reads from disk0 and writes to disk1, along with what are obviously Core Storage calls occurring between each:

    15:33:27.189432 RdChunkCS D=0x00debd00 B=0x20000 /dev/disk0s2 0.000328 W kernel_task.17601
    15:33:27.189468 RdBgMigrCS D=0x0033d8a0 B=0x20000 /dev/CS 0.000367 W kernel_task.17601
    15:33:27.190136 WrChunkCS D=0x0aeac500 B=0x20000 /dev/disk1s2 0.000629 W kernel_task.17601
    15:33:27.190172 WrBgMigrCS D=0x0033d8a0 B=0x20000 /dev/CS 0.000670 W kernel_task.17601
    15:33:27.190531 RdChunkCS D=0x00debe00 B=0x20000 /dev/disk0s2 0.000328 W kernel_task.17601
    15:33:27.190568 RdBgMigrCS D=0x0033d8c0 B=0x20000 /dev/CS 0.000369 W kernel_task.17601
    15:33:27.191233 WrChunkCS D=0x0aeac600 B=0x20000 /dev/disk1s2 0.000627 W kernel_task.17601
    15:33:27.191269 WrBgMigrCS D=0x0033d8c0 B=0x20000 /dev/CS 0.000667 W kernel_task.17601
    15:33:27.191638 RdChunkCS D=0x00debf00 B=0x20000 /dev/disk0s2 0.000336 W kernel_task.17601
    15:33:27.191673 RdBgMigrCS D=0x0033d8e0 B=0x20000 /dev/CS 0.000376 W kernel_task.17601

The four calls listed, "RdChunkCS," "WrChunkCS," "RdBgMigrCS," and "WrBgMigrCS," are all referenced in the fs_usage source as being Core Storage operations; the last two are noted in the code comments as referring to "composite disk block migration" calls. This is the actual "tiering" activity that forms the core of Fusion Drive. The blocks being referenced are sequential (on disk0, the process is reading block 0x00debd00, then 0x00debe00, and then 0x00debf00), and the actual chunk size being moved around is 128KB (0x20000 bytes).
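The hex values are easy to sanity-check. The sketch below redoes the arithmetic in Python using addresses from the fs_usage trace. Two things in it are our assumptions and not something the trace states: that the D= values on disk0s2 count 512-byte blocks, and that the D= values on /dev/CS count 4096-byte blocks.

```python
CHUNK_BYTES = 0x20000        # B= value on every line of the trace

# D= values copied from the trace.
ssd_reads = [0x00DEBD00, 0x00DEBE00, 0x00DEBF00]   # RdChunkCS on /dev/disk0s2
cs_offsets = [0x0033D8A0, 0x0033D8C0, 0x0033D8E0]  # RdBgMigrCS on /dev/CS

DISK_BLOCK_BYTES = 512       # assumption: disk-level D= counts 512-byte blocks
CS_BLOCK_BYTES = 4096        # assumption: /dev/CS D= counts 4096-byte blocks

def strides(addresses):
    """Differences between consecutive addresses."""
    return [b - a for a, b in zip(addresses, addresses[1:])]

print(CHUNK_BYTES // 1024)    # 128 (KB per chunk)
print(strides(ssd_reads))     # [256, 256]
print(strides(cs_offsets))    # [32, 32]

# Under the assumed block sizes, each stride covers exactly one chunk,
# which is what back-to-back sequential 128KB reads would look like.
print(strides(ssd_reads)[0] * DISK_BLOCK_BYTES == CHUNK_BYTES)
print(strides(cs_offsets)[0] * CS_BLOCK_BYTES == CHUNK_BYTES)
```

If those block sizes are right, each read picks up exactly where the previous one ended, which fits the description of the migration walking sequentially through the SSD.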

The FD migration process ran for a short amount of time (though longer than the iostat output above), then stopped. I totaled up the amount of data copied, and it came out to approximately 4GB.
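Totaling the migrated data amounts to summing the B= field over the write calls. Here is a hedged sketch of that tally in Python, run against the first eight lines of the trace shown earlier; the regular expression and function name are ours, and a full capture would need to be fed in to reproduce the 4GB figure.

```python
import re

# First eight lines of the fs_usage trace shown earlier (two full read/write cycles).
SAMPLE = """\
15:33:27.189432 RdChunkCS D=0x00debd00 B=0x20000 /dev/disk0s2 0.000328 W kernel_task.17601
15:33:27.189468 RdBgMigrCS D=0x0033d8a0 B=0x20000 /dev/CS 0.000367 W kernel_task.17601
15:33:27.190136 WrChunkCS D=0x0aeac500 B=0x20000 /dev/disk1s2 0.000629 W kernel_task.17601
15:33:27.190172 WrBgMigrCS D=0x0033d8a0 B=0x20000 /dev/CS 0.000670 W kernel_task.17601
15:33:27.190531 RdChunkCS D=0x00debe00 B=0x20000 /dev/disk0s2 0.000328 W kernel_task.17601
15:33:27.190568 RdBgMigrCS D=0x0033d8c0 B=0x20000 /dev/CS 0.000369 W kernel_task.17601
15:33:27.191233 WrChunkCS D=0x0aeac600 B=0x20000 /dev/disk1s2 0.000627 W kernel_task.17601
15:33:27.191269 WrBgMigrCS D=0x0033d8c0 B=0x20000 /dev/CS 0.000667 W kernel_task.17601
"""

# timestamp, operation, D=<hex>, B=<hex>, device
LINE_RE = re.compile(
    r"^\S+\s+(\w+)\s+D=(0x[0-9a-fA-F]+)\s+B=(0x[0-9a-fA-F]+)\s+(\S+)"
)

def bytes_for_op(trace, op="WrChunkCS"):
    """Sum the B= byte counts of every line whose operation matches `op`."""
    total = 0
    for line in trace.splitlines():
        match = LINE_RE.match(line.strip())
        if match and match.group(1) == op:
            total += int(match.group(3), 16)
    return total

written = bytes_for_op(SAMPLE)
print(f"{written} bytes written to the HDD in this excerpt")

# How many 128KB chunks a 4GB demotion would take, reading "4GB" as 4 * 2**30 bytes.
print((4 * 2**30) // 0x20000)
```

Counting only WrChunkCS avoids double-counting, since each chunk also shows up in the paired RdChunkCS, RdBgMigrCS, and WrBgMigrCS lines.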

I repeatedly copied large files to the drive, and the results remained consistent—the copy operations would land on the SSD until it filled, then shift seamlessly over to the HDD and continue until completion. The SSD would then immediately move data off of itself until it had 4GB of free space. If I copied in a 2GB file, it would demote 2GB of data from somewhere else to the HDD; if I copied a 1GB file, it would demote 1GB. If I copied in 50GB, it would smoothly ingest all 50GB, and then demote 4GB of data off of the SSD.

This 4GB buffer is definitely what Anandtech's source meant. FD doesn't keep a constant 4GB "landing zone" if the amount of available SSD space is greater than 4GB; rather, FD keeps a minimum of 4GB free on the SSD.
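The observed behavior can be captured in a toy model. The Python sketch below is our simplification of what the tests showed and not Apple's algorithm: the 110GB capacity is the approximate figure from the copy test, sizes are whole gigabytes, and the model says nothing about which data FD chooses to demote.

```python
SSD_CAPACITY_GB = 110  # approximate usable SSD space seen in the copy test
MIN_FREE_GB = 4        # free space FD restored on the SSD after each copy

def copy_in(ssd_used, hdd_used, size_gb):
    """Model one copy followed by the background demotion pass.

    Returns (ssd_used, hdd_used, spilled, demoted), all in GB.
    """
    # Writes land on the SSD until it is full, then spill to the HDD.
    to_ssd = min(size_gb, SSD_CAPACITY_GB - ssd_used)
    spilled = size_gb - to_ssd
    ssd_used += to_ssd
    hdd_used += spilled

    # Afterwards, data is demoted until the minimum free space is restored.
    free = SSD_CAPACITY_GB - ssd_used
    demoted = max(0, MIN_FREE_GB - free)
    ssd_used -= demoted
    hdd_used += demoted
    return ssd_used, hdd_used, spilled, demoted

# Fresh system with about 10GB in use: a 3GB copy stays on the SSD.
print(copy_in(10, 0, 3))    # (13, 0, 0, 0)

# SSD already down to 4GB free: a 2GB copy triggers a 2GB demotion.
print(copy_in(106, 0, 2))   # (106, 2, 0, 2)

# Same starting point, 50GB copy: 46GB spills during the copy, 4GB is demoted after.
print(copy_in(106, 0, 50))  # (106, 50, 46, 4)
```

The model reproduces the three cases described above: small copies on an empty SSD stay put, copies into a nearly full SSD demote exactly as much as they add, and oversized copies spill first and then trigger a fixed 4GB cleanup.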
