It's been five years since the last report from the Intergovernmental Panel on Climate Change, and the organization is currently preparing its fifth assessment report (AR5). These reports provide both an update on what we've learned about the climate in the intervening years and projections for likely future climates based on that new understanding.

Those projections are powered by climate models. Starting with AR4, those projections were based on the work of the World Climate Research Programme. This group identifies the current climate models from a variety of institutions, and runs them under a variety of emissions scenarios. Then, the WCRP collects the results of multiple runs from the ensemble of climate models, and uses them to predict the likely climate change and remaining uncertainties.
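To make the ensemble idea concrete, here is a minimal Python sketch of how multiple runs from several models might be boiled down to a central estimate and a spread. The model names and warming values are invented for illustration and are not CMIP output, and averaging each model's runs before combining models is an assumption of this sketch, not a description of the WCRP's actual procedure.

```python
from statistics import mean, stdev

# Hypothetical end-of-century warming (in °C) from repeated runs of three
# made-up models. Illustrative values only, not real model results.
runs_by_model = {
    "model_a": [3.9, 4.1, 4.0],
    "model_b": [4.6, 4.8],
    "model_c": [3.2, 3.4, 3.3],
}


def ensemble_summary(runs_by_model):
    """Average each model's runs, then summarize across the models."""
    # Averaging within a model first keeps a model with many runs
    # from dominating the ensemble (an assumption of this sketch).
    per_model = {name: mean(runs) for name, runs in runs_by_model.items()}
    values = list(per_model.values())
    return {
        "per_model": per_model,
        "ensemble_mean": mean(values),  # central estimate
        "spread": stdev(values),        # sample standard deviation across models
    }


summary = ensemble_summary(runs_by_model)
print(f"mean: {summary['ensemble_mean']:.2f} °C, spread: {summary['spread']:.2f} °C")
```

In this toy case, the spread across models stands in for the "remaining uncertainties": adding a model that disagrees with the others widens it, even if that model is more detailed.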

You might expect the progress made during the intervening five years would greatly narrow the uncertainties since the last report. If so, get ready for disappointment. A pair of researchers from ETH Zurich has compared the results from AR4 with the ones that will be coming out in AR5, and they find that the uncertainties haven't gone down much. And, somewhat ironically, they blame the improvements—as researchers are able to add more factors to their models, each new factor comes with its own uncertainties, which keeps the models from narrowing in on a value.

The model comparisons are run by the Coupled Model Intercomparison Project, or CMIP ("coupled" refers to treating the ocean and atmosphere as a linked system). CMIP3, confusingly, was a run of the models to prepare for the IPCC AR4. The groups have since synchronized, and CMIP5 will be part of AR5.

The number of changes, some brought about by increased computing power, is staggering. More models are used, including some from entirely new sources. Additional forcings are included in many of them (at the time of AR3, several of the models assumed solar and volcanic forcings were constant). All models now include both the direct effects of aerosols (which reflect sunlight) and their indirect effects, including changes in clouds and precipitation. In addition, the models are now run at a much finer spatial resolution, since advances in computing power allow more calculations to be used in each model run.

An exact comparison between the two sets of models is made challenging by the fact that the IPCC has changed the details of its CO2 emissions scenarios. But, as best they could, the authors compared the projections of the two sets of models.

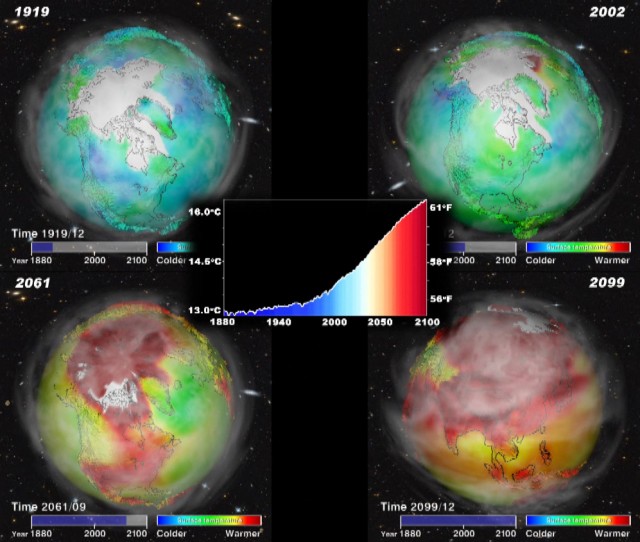

When it comes to temperatures, CMIP3 and CMIP5 are in rough agreement as to the magnitude and regional details of future warming. But the area where the models produce inconsistent results is actually larger in the updated version, with a region southeast of Greenland remaining difficult to predict all the way out to the end of the century. Predicted precipitation changes are similar, with areas of uncertain predictions growing as the century wears on for both CMIP3 and CMIP5 (they're concentrated around the equator). Although the new models seem to have fewer areas of uncertainty, they now have one sitting on top of a major agricultural area: North America's Great Plains.
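One simple way to think about "areas where the models produce inconsistent results" is to ask, region by region, what fraction of the models agree on the direction of the change. The Python sketch below does that. It is not necessarily the measure the authors used; the region names, the values, and the 0.8 agreement threshold are all assumptions made for illustration.

```python
def sign_agreement(changes):
    """Fraction of models that share the majority sign of the projected change."""
    positive = sum(1 for c in changes if c > 0)
    negative = sum(1 for c in changes if c < 0)
    return max(positive, negative) / len(changes)


def uncertain_regions(changes_by_region, threshold=0.8):
    """Regions where fewer than `threshold` of the models agree on the sign."""
    return [
        region
        for region, changes in changes_by_region.items()
        if sign_agreement(changes) < threshold
    ]


# Hypothetical precipitation changes (in percent) from five made-up models.
# Illustrative values only, not real model results.
changes_by_region = {
    "region_x": [5.0, 7.0, 4.0, 6.0, 3.0],     # every model projects an increase
    "region_y": [2.0, -3.0, 1.0, -1.0, -2.0],  # models split on the direction
}

flagged = uncertain_regions(changes_by_region)
print(flagged)
```

Here only `region_y` is flagged, since just three of its five models agree on the direction of the change.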

How can we improve the models yet have them produce more uncertainty? The authors have a long list of reasons. Some of these are systemic: limited processing time, lack of detailed data to test the models against, and a lack of clear metrics to judge the success of a given model. Others focus more on the nature of climate change itself: forcings we haven't identified, and a degree of natural variation. But the authors suspect that at least some of the problem boils down to researchers using their increased computational capacity to add even more factors into their models:

> In contrast to end users, who would define model quality on the basis of prediction accuracy, climate model developers often judge their models to be better if the processes are represented in more detail. Thus, the new models are likely to be better in the sense of being physically more plausible, but it is difficult to quantify the impact of that on projection accuracy.

In other words, climatologists are building their models to best reflect what we know about the natural world. They're not building them to be the most effective means of making predictions for the future of specific regions of the globe. And, at least partly as a result, the models scientists use capture a great deal of uncertainty in our understanding of the climate. But that's a problem for policymakers, who could use specific predictions to act on.

In any case, given a similar set of emissions, the new set of models seems to behave very much like the old one, with temperatures rising by over 4°C by the end of the century when emissions continue unabated. Even when taking uncertainties into account, it's difficult to keep that change under 3°C without significant changes to our fossil fuel habit.

Nature Climate Change, 2012. DOI: 10.1038/NCLIMATE1716.
