STANFORD, CA—Four academics from West Point and Samford University in Alabama set out to answer a seemingly simple question: how would one write a computer program to issue speeding tickets? After all, speed limits are fairly simple—you’re either driving faster than the posted number or you’re not.

In an age when Google and its competitors are building fully autonomous cars (and states are passing laws to allow them), it’s not hard to imagine fully automated enforcement of traffic laws, too. We already have red-light cameras, after all.

“If anyone thinks it is a simple thing to do, to take a simple law [and convert it to machine-readable code], it is significantly more complicated than one thought,” Woodrow Hartzog, a law professor at the Cumberland School of Law at Samford University and one of the paper's co-authors, told Ars.

Hartzog and one of his co-authors presented the paper at the “We Robot” conference at Stanford Law School on Monday, where academics, scholars, lawyers, and technologists gathered to discuss robots, autonomous systems, and how they interact with society. The conference, which is also being live-streamed, concludes on Tuesday.

The four academics, who included two computer science professors and an English and philosophy professor from West Point, collected real-world speed data and assigned third-year computer science students at the famed military academy to “determine algorithmically whether violations occurred” and to issue “mock traffic tickets.”

What is a speeding law, anyway?

As the academics write:

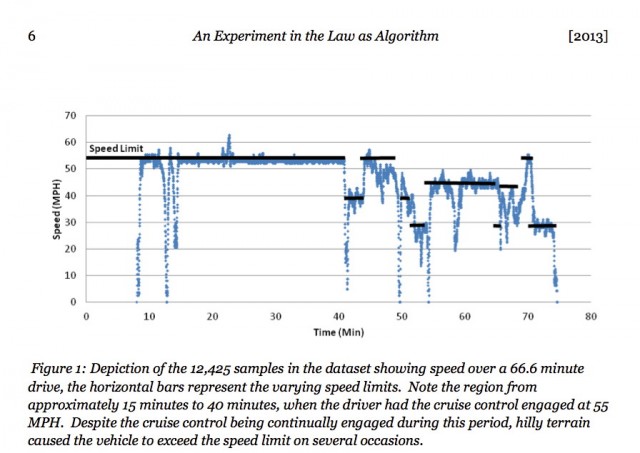

These programmers were provided with real-world driving data extracted from the on-board computer of a commuter's automobile (a late-model Toyota Prius) and a second dataset providing manually constructed, but realistically derived, speed limit information. Given this data, the first group was asked to implement “the letter of the law” and issue traffic citations accordingly (the datasets are available online). The second group was given an additional, carefully crafted, written specification on which to base their software implementation. Both a computer scientist and an attorney reviewed this specification for accuracy. The specification was also verbally briefed to the third group to further clarify the requirements. The programmers had two weeks to complete the assignment.

Upon completion of the assignment, the professors discussed the programs and their implications with the students. Some students, particularly those tasked with implementing “the letter of the law,” found that their programs issued “tickets” numerous times, far more often than a human police officer likely would. After all, most officers allow a tolerance window of 5 to 10 mph before they consider writing a speeding ticket.
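To make the contrast concrete, here is a minimal sketch (not the students’ or the researchers’ actual code) of how a strict “letter of the law” check differs from one with an officer-style tolerance window. It assumes a hypothetical data format, a list of (speed_mph, limit_mph) pairs with one pair per sensor reading; the function names, the sample readings, and the 5 mph default tolerance are all illustrative assumptions.

```python
from typing import List, Tuple

# Hypothetical sample format: (measured speed in mph, posted limit in mph).
Sample = Tuple[float, float]


def strict_violations(samples: List[Sample]) -> int:
    """Count every sample above the posted limit ("letter of the law")."""
    return sum(1 for speed, limit in samples if speed > limit)


def tolerant_violations(samples: List[Sample], tolerance_mph: float = 5.0) -> int:
    """Count only samples that exceed the limit by more than a tolerance.

    The 5 mph default is an illustrative assumption, not a legal standard.
    """
    return sum(1 for speed, limit in samples if speed > limit + tolerance_mph)


if __name__ == "__main__":
    # Made-up readings on a 55 mph road, for illustration only.
    drive = [(54.0, 55.0), (56.5, 55.0), (58.0, 55.0), (61.2, 55.0), (55.0, 55.0)]
    print(strict_violations(drive))    # 3
    print(tolerant_violations(drive))  # 1
```

Even this tiny sketch embeds interpretive choices: a reading exactly at the limit (or exactly at limit plus tolerance) is not counted here, and a different programmer could reasonably decide otherwise.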

The widely varying interpretations by reasonable programmers demonstrate the human filter (or “bias”) that goes into the drafting of the enforcement code. Once drafted, the code is unbiased in its execution, but bias is encoded into the system. This bias can vary widely unless the appropriate legislative or law enforcement body takes extra precautions, such as drafting a software specification and performing rigorous testing.

Other issues arose as well, such as the frequency of data collection. What if a particular vehicle could be tracked continuously, in a way that is not currently possible for a law enforcement officer with a speed gun? As the authors note:

An automated system, however, could maintain a continuous flow of samples based on driving behavior and thus issue tickets accordingly. This level of resolution is not possible in manual law enforcement. In our experiment, the programmers were faced with the choice of how to treat many continuous samples all showing speeding behavior. Should each instance of speeding (e.g., a single sample) be treated as a separate offense, or should all consecutive speeding samples be treated as a single offense? Should the duration of time exceeding the speed limit be considered in the severity of the offense?
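The choice the authors describe can be sketched in a few lines. This is a hypothetical illustration, not code from the study: it assumes timestamped samples of (seconds, speed_mph, limit_mph), merges each run of consecutive speeding samples into a single offense, and records the run’s duration and worst excess so that either could feed into severity. The `Offense` type and `group_offenses` function are invented for this example.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical sample format: (timestamp in seconds, speed in mph, limit in mph).
Sample = Tuple[float, float, float]


@dataclass
class Offense:
    start_s: float       # timestamp of the first speeding sample in the run
    end_s: float         # timestamp of the last speeding sample in the run
    max_over_mph: float  # largest excess over the limit during the run

    @property
    def duration_s(self) -> float:
        return self.end_s - self.start_s


def group_offenses(samples: List[Sample]) -> List[Offense]:
    """Merge each run of consecutive speeding samples into one offense."""
    offenses: List[Offense] = []
    current: Optional[Offense] = None
    for t, speed, limit in samples:
        over = speed - limit
        if over > 0:
            if current is None:
                # A new run of speeding begins.
                current = Offense(start_s=t, end_s=t, max_over_mph=over)
            else:
                # Extend the current run and track the worst excess.
                current.end_s = t
                current.max_over_mph = max(current.max_over_mph, over)
        elif current is not None:
            # A compliant sample closes the current run.
            offenses.append(current)
            current = None
    if current is not None:
        # The data ended while the driver was still speeding.
        offenses.append(current)
    return offenses


if __name__ == "__main__":
    # Made-up one-second samples on a 55 mph road.
    drive = [(0, 50, 55), (1, 57, 55), (2, 60, 55), (3, 58, 55), (4, 54, 55), (5, 56, 55)]
    print(sum(1 for _, s, lim in drive if s > lim))  # 4 per-sample "tickets"
    print(len(group_offenses(drive)))                # 2 grouped offenses
```

Counted per sample, this drive yields four tickets; grouped, it yields two. Note that a single-sample offense has zero duration in this sketch, so how the sampling interval is accounted for is yet another judgment call a real system would have to make.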

What would Robocop do?

Imagine a world where every violation of the law is enforced by a machine. Most citizens would likely want some laws, such as those against violent crime, enforced 100 percent of the time. Assault is obviously a more serious crime than speeding, which is in turn more serious than jaywalking. But do we want to live in a world where jaywalkers are caught every single time?

“The law gives you wiggle room in the area of discretion after the fact,” Hartzog added. “There’s law on the books and law on the street.”

Beyond an individual officer’s interpretation of the law, there are points in the American justice system where humans can intervene and make a judgment call about what crime likely occurred and what the appropriate sentence should be.

“But when you’re talking about automated enforcement, all of the enforcement has to be put in before implementation of the law—you have to be able to predict different circumstances,” he noted.

The study suggests that automated, machine-driven law enforcement, even for something as simple as speeding tickets, may not be desirable. As the authors put it:

The question arises, then: What is the societal cost of automated law enforcement, particularly when involving artificially-intelligent robotic systems unmediated by human judgment? Our tradition of jurisprudence rests, in large part, on the indispensable notion of human observation and consideration of those attendant circumstances that might call—or even mandate—mitigation, extenuation, or aggravation. When robots mediate in our stead either on the side of law enforcement or the defendant, whether for reasons of frugality, impartiality, or convenience—an essential component of our judicial system is, in essence, stymied. Synecdochically embodied by the judge, the jury, the court functionary, etc., the human component provides that necessary element of sensibility and empathy for a system that always, unfortunately, carries with it the potential of rote application, a lady justice whose blindfold ensures not noble objectivity but compassionless indifference.
